Video encoder comparison

There has been some buzz about HTML5 web video lately. I won’t retell the story here, because it’s almost completely political rather than technical, and I’m only interested in the technical side of things. One thing that struck me, though, is that many people believe that the two contenders, H.264 and Ogg Theora, are comparable in quality and performance. To me, as someone who implements video codecs for a living, this seemed quite odd: How can a refined version of an old and crippled MPEG-4 derivative come anywhere close to a format that incorporates (almost) all of the latest and greatest in video compression research? I decided to find out and compare H.264, Theora and a few other codecs myself.

The contestants

This isn’t one of the Doom9 codec shootouts, so I wasn’t going to compare each and every implementation of H.264, MPEG-4 and other codecs of the world. I’m mainly interested in how the different compression formats compare to each other. That’s why I picked just one representative candidate for each format:

- For H.264, I’m using x264, the landmark (and, to my knowledge, only) open source H.264 encoder. The developers claim that it’s easily on par with commercial implementations and it’s likely the most popular encoder for H.264 web video.

  I further subdivided H.264 into its three most common profiles (Constrained Baseline, Main and High) to see how much influence the more advanced features in the higher profiles have.

- For Theora, I use the official libtheora encoder, which has been greatly improved in the last year.

- Then there’s MPEG-4 Advanced Simple Profile, which used to be popular before H.264 entered the stage. I use the XviD encoder here, which is said to be the best open-source MPEG-4 encoder in existence.

- For comparison, I added the good old MPEG-2, just to see how this dinosaur compares to the new kids on the (macro)block. There are countless MPEG-2 encoders; I used the one integrated into FFmpeg’s libavcodec, which is said to have excellent quality.

- Finally, there’s Dirac, a relatively new wavelet-based format. Like Theora, it’s completely free, so it may also be considered as an alternative to Theora if this aspect matters. There are currently two encoders, both apparently in early stages of development; I used libdirac, the official reference implementation, because the faster alternative, libschroedinger, failed to generate usable bitstreams at all.

Unfortunately, Windows Media Video 9 and its brother VC-1 are missing here – I’d really love to include them, but I conducted all of the tests on Linux and to my knowledge, there’s no VC-1 encoder for Linux yet.

Testing methodology

Now I’m going to explain how exactly my tests were conducted. If this is not interesting to you, you can skip this section altogether – but don’t complain about anything if you didn’t read it! ;)

All tests were run on Ubuntu 9.10 on an Intel Core 2 Duo E6400 CPU (3 GHz). The latest CVS/SVN/git versions of FFmpeg, MPlayer, x264, libogg+libvorbis+libtheora, xvidcore, libdirac, ffmpeg2theora and ffmpeg2dirac as of 2010-02-04 were used.

I used only one test sequence: A movie trailer for the recent »Star Trek« movie, downloaded from Apple’s movie trailer page in full HD resolution and downscaled to 800×336 pixels using a high-quality Lanczos scaler. This is one of the test cases used by Theora 1.1 main developer Monty during his optimization work. Certainly, a single sequence is not fully representative of a codec’s performance, but movie trailers are usually a good compromise because they contain a mix of high-action and static scenes.

The sequence was encoded at target bitrates of 250, 350, 500, 700, 1000, 1400 and 2000 kilobits per second (kbps). This is a logarithmic scale: There’s a factor of roughly the square root of 2 between consecutive steps. The lowest bitrate was chosen as an extremely low-rate test – too low for FFmpeg’s MPEG-2 encoder, which simply refused to encode the stream at this bitrate. The upper-end 2 Mbps rate is at a point where quality is expected to be nearly transparent with a modern codec.
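A quick arithmetic check of that ladder: the chosen targets look like rounded versions of 250·(√2)^k for k = 0…6, so consecutive steps alternate between 1.4 and about 1.43. The snippet below only illustrates that arithmetic; it is not taken from the published test scripts.

```python
import math

# Target bitrates (kbps) used in the test.
targets = [250, 350, 500, 700, 1000, 1400, 2000]

# Ratio between consecutive steps: alternates between 1.4 and ~1.43.
ratios = [b / a for a, b in zip(targets, targets[1:])]
print([round(r, 3) for r in ratios])  # [1.4, 1.429, 1.4, 1.429, 1.4, 1.429]

# An exact sqrt(2) ladder starting at 250 kbps; the chosen targets are rounded versions of it.
ideal = [250 * math.sqrt(2) ** k for k in range(len(targets))]
print([round(x) for x in ideal])  # [250, 354, 500, 707, 1000, 1414, 2000]
```

Over six steps the range spans a factor of 8, i.e. (√2)^6.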

The encoding parameters were chosen to represent good but sane settings, i.e. the resulting streams should still be playable on hardware decoders. The following command lines and encoding parameters were used:

```
x264 --slow-firstpass --bframes 3 --b-adapt 2 --b-pyramid strict --ref 4 --partitions all --direct auto --weightp 2 --me umh --subme 10 --trellis 2 --bitrate <bitrate> --pass X
```

This command line was used for all three H.264 profiles, with `--profile baseline`, `--profile main` or `--profile high` appended. These options force a specific profile and, if necessary, override any other settings, like the B-Frame settings in Baseline Profile. The other encoders were run as follows:

```
# Theora
ffmpeg2theora --optimize --two-pass -V <bitrate>
# MPEG-4 ASP (XviD)
mencoder -ovc xvid -xvidencopts me_quality=6:qpel:trellis:nogmc:chroma_me:hq_ac:vhq=4:lumi_mask:max_bframes=2:bitrate=<bitrate>:pass=X
# MPEG-2 (libavcodec)
mencoder -ovc lavc -lavcopts vcodec=mpeg2video:vme=4:mbd=2:keyint=18:lumi_mask=0.1:trell:cbp:mv0:subq=8:qns=3:vbitrate=<bitrate>:vpass=X
# Dirac
ffmpeg2dirac --multi-quants --combined-me --mv-prec 1/8 --numL1 30 --sepL1 2
```

Note that all tests were run using two-pass encoding, with the exception of Dirac, which doesn’t offer this option (yet?).

I’m also interested in the algorithmic complexity of the various encoders, so I measured the time required for encoding, too. I used the wall-clock time as reported by /usr/bin/time. The times for both encoding passes were added, and the time required to decode the input sequence (which was losslessly compressed) was subtracted. All tests were repeated three times and the minimum times were used. All multithreading options were turned off; in fact, I even ran the tests with a fixed single-CPU affinity mask. This ensures that the measured times reflect encoder complexity without penalizing encoders that lack proper multi-core support.
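The timing procedure can be sketched in Python as follows. This is a hypothetical reconstruction, not the published scripts: it assumes the minimum is taken per measurement (each pass and the decode-only run separately), uses `time.perf_counter()` in place of /usr/bin/time, and pins the process to one CPU with `os.sched_setaffinity` (Linux only), a mask that child processes inherit. The command passed to `best_of` in the usage line is a placeholder for a real encoder invocation.

```python
import os
import subprocess
import sys
import time


def pin_to_one_cpu():
    """Restrict this process, and the children it starts, to a single CPU (Linux only)."""
    if hasattr(os, "sched_setaffinity"):
        cpu = min(os.sched_getaffinity(0))
        os.sched_setaffinity(0, {cpu})


def wall_time(cmd):
    """Wall-clock seconds for one run of cmd, given as a list of argv strings."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return time.perf_counter() - start


def best_of(cmd, repeats=3):
    """Minimum wall-clock time over several runs, which filters out scheduling noise."""
    return min(wall_time(cmd) for _ in range(repeats))


def net_encode_time(pass_times, decode_time):
    """Sum of all encoding passes minus the time spent only decoding the lossless input."""
    return sum(pass_times) - decode_time


if __name__ == "__main__":
    pin_to_one_cpu()
    # Placeholder: times a no-op Python process instead of a real encoder pass.
    print(best_of([sys.executable, "-c", "pass"]))
```

For example, with hypothetical best-of-three times of 41.0 s and 57.5 s for the two passes and 8.5 s for decoding the input, `net_encode_time([41.0, 57.5], 8.5)` gives 90.0 s.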

As an additional measurement of codec complexity, the time required for decoding the clips was measured, too. Decoding was done using FFmpeg for MPEG-4, MPEG-2 and H.264; Theora was decoded by its reference decoder and Dirac was decoded with libschroedinger. In all cases except H.264, this means that a pretty fast decoder is used and the results are close to what is possible for the respective format. For H.264, the values are roughly 20% worse than what a good decoder like DivX 7 can achieve.

Objective quality measurements were conducted using the SSIM index, an image quality metric that models human perception much better than simple metrics like PSNR or MSE do. The implementation was taken from AviSynth’s SSIM filter with lumi masking enabled.
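For reference, the SSIM index of two image windows x and y combines luminance, contrast and structure terms: SSIM = ((2·μx·μy + C1)(2·σxy + C2)) / ((μx² + μy² + C1)(σx² + σy² + C2)), with C1 = (0.01·L)² and C2 = (0.03·L)² for a dynamic range L (255 for 8-bit video). The sketch below computes it on 8-bit luma in pure Python and averages it over non-overlapping 8×8 blocks. It is a simplified illustration, not AviSynth’s filter: there is no sliding or Gaussian window and no lumi masking, so its numbers will not match the figures in this article.

```python
def ssim_window(x, y, dynamic_range=255):
    """SSIM of two equally sized lists of pixel values, treated as a single window."""
    n = len(x)
    c1 = (0.01 * dynamic_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilizes the contrast/structure term
    mx = sum(x) / n
    my = sum(y) / n
    var_x = sum((a - mx) ** 2 for a in x) / (n - 1)
    var_y = sum((b - my) ** 2 for b in y) / (n - 1)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (var_x + var_y + c2)
    )


def mean_ssim(img_a, img_b, block=8):
    """Average SSIM over non-overlapping block x block windows of two 2-D luma arrays.

    Edge pixels that do not fill a whole block are ignored in this sketch.
    """
    height, width = len(img_a), len(img_a[0])
    scores = []
    for top in range(0, height - block + 1, block):
        for left in range(0, width - block + 1, block):
            rows = range(top, top + block)
            cols = range(left, left + block)
            win_a = [img_a[r][c] for r in rows for c in cols]
            win_b = [img_b[r][c] for r in rows for c in cols]
            scores.append(ssim_window(win_a, win_b))
    return sum(scores) / len(scores)
```

Identical inputs score 1.0; any change in mean, contrast or structure pulls the score below 1.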

UPDATE [2010-02-28]: I have now uploaded the scripts used to perform the tests. Feel free to reproduce the tests on your own system and tune the parameters:

encoder_test_scripts-20100226.tar.gz (8.9 kB)

Bitrate management

Almost all encoders did a decent job of keeping to the configured bitrate. x264 is particularly exact: The largest deviation I measured was just over a tenth of a percent. FFmpeg’s MPEG-2 encoder is also pretty exact; it hit all target bitrates within one percent. Theora is not much worse: its results were within two percent.

XviD has more trouble keeping to the bitrate – at the lower end of the bitrate range, it used up to 35% more bits than I told it to, but at the upper end, it used up to 8% fewer. Between 500 and 1400 kbps, the results were pretty precise, though. It seems like XviD has something like a favorite bitrate range, and everything outside that range is pulled towards it.

Dirac consistently used a much higher bitrate than configured, from 50% at 250 kbps (making that 375 kbps) to 9% at 2000 kbps (2083 kbps).
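The deviations above are simply the achieved bitrate relative to the target. A minimal sketch of that calculation, assuming the achieved bitrate is derived from the output size and the clip duration (3239 frames at 24 fps, according to the author’s reply in the comments); a container file also carries some overhead, so this slightly overstates the pure video bitrate. The function names are illustrative only.

```python
def bitrate_kbps(size_bytes, frames, fps):
    """Average bitrate in kbps of a stream with the given size and duration."""
    duration_s = frames / fps
    return size_bytes * 8 / duration_s / 1000


def deviation_percent(actual_kbps, target_kbps):
    """Deviation from the target bitrate in percent; positive means more bits than requested."""
    return 100.0 * (actual_kbps - target_kbps) / target_kbps


# Dirac at the lowest target: 375 kbps achieved instead of 250 kbps.
print(deviation_percent(375, 250))  # 50.0
```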

Objective quality

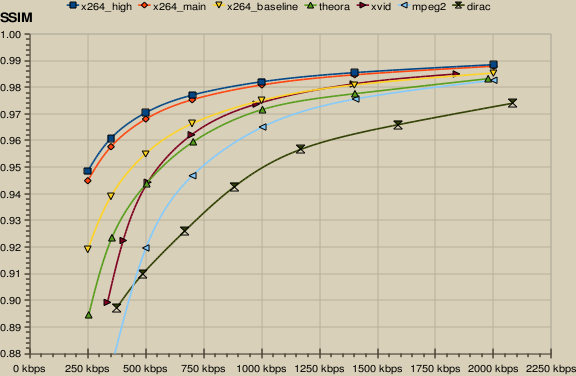

Now here’s the most important set of results from the test: The SSIM-over-bitrate graph.

This graph holds some surprises indeed. Let’s analyze it bit for bit:

- x264 High Profile and x264 Main Profile expectedly deliver the best quality by a wide margin. The difference between the two profiles is really small: High Profile is consistently a bit better, but it’s really just a tiny bit. High Profile is expected to have a more significant impact on higher-definition material, but that’s a topic for another test.

- x264 Baseline Profile is the third place over (almost) the complete bitrate range, but the difference between Main Profile and Baseline Profile is already considerable.

- XviD quite surprisingly gets the fourth place: At lower bitrates, it’s clearly inferior to x264 Baseline Profile, but for high bitrates, it even manages to surpass it a tiny bit.

- Theora is disappointingly bad: It’s between x264 Baseline Profile and XviD for lower bitrates and for higher bitrates, it’s closer to MPEG-2 than to XviD and x264.

- MPEG-2 is said to be still a good performer for high bitrates, and my test confirms that. At low bitrates, on the other hand … well, it’s more than 15 years old, what could you expect? ;)

- Dirac clearly shows that it’s still in development and lacks proper rate control – the only bitrate where it’s anywhere close to being competitive is at the very lowest end of the bitrate range. For all sensible bitrates, it’s even worse than MPEG-2.

Unfortunately, the abstract SSIM values are only good for comparing results against each other; it’s impossible to say what, e.g., an SSIM difference of 0.01 is going to look like. The only way to get real quantitative statements like »encoder A is 10% better than encoder B« is to determine which bitrate is required to achieve a specific SSIM value. To do this, I interpolated the SSIM results using cubic spline interpolation (like what you see in the graph) and used the Newton-Raphson method to determine the theoretical bitrate at a few SSIM values:

| Target SSIM | 0.95 | 0.96 | 0.97 | 0.98 |
|---|---|---|---|---|
| x264_high | 261 kbps (100%) | 342 kbps (100%) | 490 kbps (100%) | 849 kbps (100%) |
| x264_main | 288 kbps (110%) | 374 kbps (109%) | 545 kbps (111%) | 939 kbps (111%) |
| x264_baseline | 438 kbps (168%) | 576 kbps (168%) | 799 kbps (163%) | 1322 kbps (156%) |
| xvid | 554 kbps (212%) | 665 kbps (194%) | 861 kbps (176%) | 1297 kbps (153%) |
| theora | 576 kbps (220%) | 713 kbps (208%) | 943 kbps (192%) | 1648 kbps (194%) |
| mpeg2 | 743 kbps (284%) | 899 kbps (262%) | 1145 kbps (233%) | 1743 kbps (205%) |
| dirac | 1009 kbps (386%) | 1283 kbps (375%) | 1840 kbps (375%) | 2452 kbps (289%) |

The percentage values show the bitrate relative to the best result, which is x264 High Profile in all cases. These results show some very clear figures: For example, x264 Main Profile consistently requires about 10% more bitrate than High Profile to achieve the same quality, and Baseline Profile requires around 60% more bits. XviD converges towards x264 at higher qualities, starting at double the bitrate at the (quite bad-looking) SSIM index of 0.95 and reaching a respectable 153% bitrate factor at the good SSIM index of 0.98. MPEG-2 is similar, but it starts at factor 3 and goes down to factor 2 with rising quality. The same is true for Dirac, which starts at almost factor 4 and ends at factor 3. Theora converges much more slowly and stays around factor 2 compared to x264 High Profile.
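The interpolation step can be sketched as follows. This is an illustration under stated assumptions, not the script used for the table: it fits a natural cubic spline (second derivative zero at both ends) through the (bitrate, SSIM) points, then runs Newton-Raphson on the spline to solve S(b) = target, starting from the middle of the measured range. The percentages are each encoder’s bitrate divided by x264 High Profile’s bitrate at the same target. The sample curve below is synthetic, not measured data, and Newton-Raphson assumes the curve is not flat near the solution.

```python
import bisect


def natural_cubic_spline(xs, ys):
    """Return (value, slope) functions of the natural cubic spline through (xs, ys).

    xs must be strictly increasing and contain at least two points.
    """
    n = len(xs)
    h = [xs[i + 1] - xs[i] for i in range(n - 1)]
    m = [0.0] * n  # second derivatives at the knots; natural ends: m[0] = m[-1] = 0
    if n > 2:
        # Tridiagonal system for m[1..n-2]: sub-diagonal a, diagonal b, super-diagonal c.
        a = [h[i - 1] for i in range(1, n - 1)]
        b = [2.0 * (h[i - 1] + h[i]) for i in range(1, n - 1)]
        c = [h[i] for i in range(1, n - 1)]
        d = [6.0 * ((ys[i + 1] - ys[i]) / h[i] - (ys[i] - ys[i - 1]) / h[i - 1])
             for i in range(1, n - 1)]
        for i in range(1, len(b)):  # Thomas algorithm: forward elimination
            w = a[i] / b[i - 1]
            b[i] -= w * c[i - 1]
            d[i] -= w * d[i - 1]
        sol = [0.0] * len(b)
        sol[-1] = d[-1] / b[-1]
        for i in range(len(b) - 2, -1, -1):  # back substitution
            sol[i] = (d[i] - c[i] * sol[i + 1]) / b[i]
        m[1:n - 1] = sol

    def locate(x):
        # Interval [xs[k], xs[k+1]] containing x; the end pieces are used for extrapolation.
        k = min(max(bisect.bisect_right(xs, x) - 1, 0), n - 2)
        return k, xs[k + 1] - x, x - xs[k], h[k]

    def value(x):
        k, u, v, hk = locate(x)
        return ((m[k] * u ** 3 + m[k + 1] * v ** 3) / (6 * hk)
                + (ys[k] / hk - m[k] * hk / 6) * u
                + (ys[k + 1] / hk - m[k + 1] * hk / 6) * v)

    def slope(x):
        k, u, v, hk = locate(x)
        return ((m[k + 1] * v ** 2 - m[k] * u ** 2) / (2 * hk)
                - (ys[k] / hk - m[k] * hk / 6)
                + (ys[k + 1] / hk - m[k + 1] * hk / 6))

    return value, slope


def bitrate_for_ssim(bitrates, ssims, target, tol=1e-9, max_iter=50):
    """Solve S(b) = target by Newton-Raphson on the spline S through the measurements."""
    s, ds = natural_cubic_spline(bitrates, ssims)
    b = bitrates[len(bitrates) // 2]  # start in the middle of the measured range
    for _ in range(max_iter):
        step = (s(b) - target) / ds(b)
        b -= step
        if abs(step) < tol:
            return b
    raise RuntimeError("Newton-Raphson did not converge")


def relative_percent(bitrate, best_bitrate):
    """Bitrate as a percentage of the best (lowest) bitrate for the same target SSIM."""
    return 100.0 * bitrate / best_bitrate


# Synthetic, perfectly linear SSIM curve (NOT measured data), so the result is easy to check.
rates = [250, 350, 500, 700, 1000, 1400, 2000]
curve = [0.9 + 0.0001 * r for r in rates]
print(round(bitrate_for_ssim(rates, curve, 0.95), 3))  # 500.0
# Cross-check against the table: x264 Main vs. x264 High at SSIM 0.95.
print(round(relative_percent(288, 261)))  # 110
```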

Subjective quality

While SSIM is a highly useful metric for objective measurement, nothing can replace real visual inspection. For the following image comparison, I selected two frames from the trailer, one of them from a high-motion scene and one from a low-motion scene. (By the way, that’s exactly the frame Monty used in one of his status reports :)

[Image comparison: crops from a static scene and a high-action scene, showing the original and the x264 High, x264 Main, x264 Baseline, Theora, XviD, MPEG-2 and Dirac encodes at 500 kbps and 1000 kbps.]

In my opinion, the subjective results confirm the objective measurements pretty accurately.

Speed / Complexity

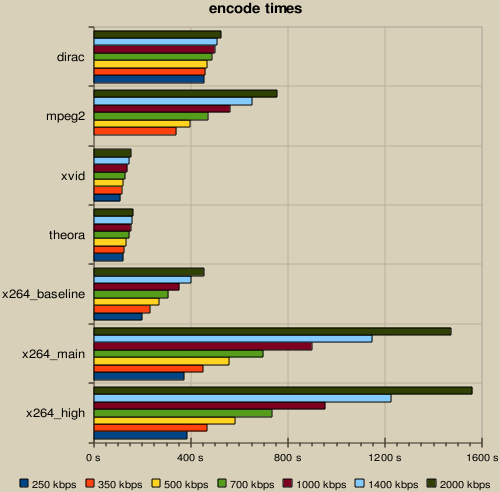

Now let’s see how much time it takes to encode video for the different formats:

This graph clearly shows that XviD and Theora are very close together and are by far the fastest encoders, regardless of the bitrate. x264’s speed depends strongly on the bitrate: In Baseline Profile, the highest bitrate takes twice as long as the lowest bitrate; for the other profiles, the factor grows to 4. Baseline Profile is also comparatively fast to encode: x264 takes around twice as long in this mode as XviD and Theora. However, Main and High Profile take very, very long to encode – CABAC, B-Frames and Weighted Prediction are powerful, but computationally expensive tools. Just like the SSIM values, the encoding times of these two profiles are very close together, so in my opinion there’s no real reason to use Main Profile.

FFmpeg’s MPEG-2 encoder is surprisingly slow, but given FFmpeg’s reputation, I doubt that’s because of missing optimizations – I rather think it’s due to libavcodec trying to squeeze the most out of the outdated format. Finally, there’s Dirac, which is about as fast as MPEG-2 is at medium bitrates, except that its speed stays almost constant over the full bitrate range.

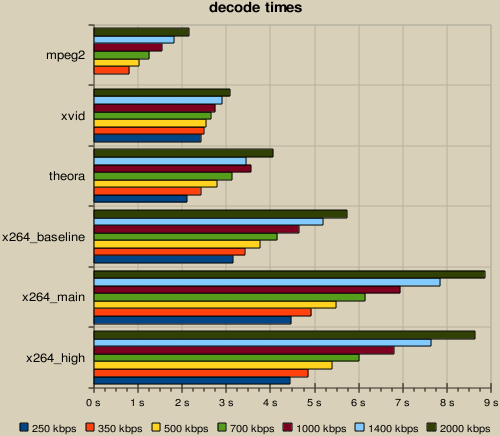

The decoding times are much less surprising: The old MPEG-2 codec is the clear winner here, followed by the similarly old-fashioned MPEG-4 ASP a.k.a. XviD. Theora is a tiny bit faster than XviD at low bitrates, but slower by about the same margin at higher bitrates. H.264 Baseline is consistently about 50% slower than Theora across the whole bitrate range, and H.264 Main and High Profiles are another 50% slower. Again, the latter two profiles are very close to each other, but this time with a little surprise: Main Profile is actually a tiny bit slower to decode than High Profile. This might be related to heavy optimization for 8×8 transform decoding in FFmpeg, but that’s just a wild guess. Mind you, other H.264 decoders may behave differently: expect around 20% more speed from either CoreAVC or MainConcept (e.g. DivX 7), which brings the difference between Theora and H.264 High Profile somewhat below a factor of 2.
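The factor-of-2 statement follows from chaining the approximate ratios quoted above; the snippet just multiplies them out. Both readings of the 20% figure are shown (20% less decoding time, as the methodology section phrases it, or 20% higher throughput); all inputs are the rounded figures from the text, so the results are only rough.

```python
# Decoding time relative to Theora, using the rounded ratios quoted in the text.
baseline_vs_theora = 1.5  # Baseline is ~50% slower than Theora
high_vs_baseline = 1.5    # Main/High are ~50% slower again
high_vs_theora = baseline_vs_theora * high_vs_baseline  # with FFmpeg's H.264 decoder

# A faster H.264 decoder, under the two readings of "about 20%":
time_20_percent_less = high_vs_theora * 0.8        # 20% less decoding time
throughput_20_percent_more = high_vs_theora / 1.2  # 20% more frames per second
print(round(high_vs_theora, 3), round(time_20_percent_less, 3), round(throughput_20_percent_more, 3))
# 2.25 1.8 1.875
```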

Dirac isn’t included in the graph for a good reason: Even the optimized Schroedinger decoder is more than 3 times slower than H.264 Main/High Profile …

Conclusion

My conclusion in one sentence: x264 is the best free video encoder. The H.264 format proves that it’s the most powerful video compression scheme in existence. The main competitor in the web video field, Ogg Theora, is a big disappointment: I never expected it to play in the same league as x264, but I didn’t think it would be worse than even Baseline Profile, or that it would be in the same league as the venerable old XviD, which doesn’t even have in-loop deblocking. (But then again, XviD does have B-Frames, which might have made the difference here.)

The other codec that’s often cited by the »web video must be free« camp, Dirac, is an even larger disappointment: Not only is it by far the worst codec in the field, worse even than the more than 15-year-old MPEG-2, it’s also painfully slow to decode. So I can only reiterate: If quality matters, then H.264 is the way to go, there’s absolutely no doubt about that.

Comments

libavcodec will encode much faster, and probably not much worse, without “qns=3”. Unfortunately, tuning lavc encodes is really difficult (pretty much all the options need to be changed from defaults, you’ve only changed 75% of them), so I can’t complain too much.

You might want to try “vb_strategy=2:vmax_b_frames=4:cmp=262:subcmp=262:dia=770” – the last three being magic for rd+chroma+UMH motion search.

Out of curiosity, why did you manually specify x264 settings somewhere between preset slower and veryslow? --slow-firstpass in particular hardly improves quality at all but is a lot slower. And the Dirac encoders don’t support two-pass encoding, so you can’t really compare their speed in a single pass to encoders that do two passes.

for theora, you shouldn’t try to use bitrate number at all, use quality number instead and check the quality.

setting a bitrate using ffmpeg2theora will only produce CBR files, soft-target does not help. Only quality number (1-10) seems to be able to do VBR in theora.

You should be using Schroedinger, not dirac-research. The latter is a research encoder and generally doesn’t have sane defaults. At SD sizes, Schroedinger is pretty consistently somewhere between a good MPEG-4 ASP encoder and x264.

If you had difficulties using Schroedinger, you could have asked.

(Also, “--mv-prec 1/8” WTF? I’m not surprised you got bad results.)

It’s unfortunate that you didn’t use the most current Theora encoder… The 1.1 release is known to perform poorly on SSIM, the current encoder work results in an across the board SSIM improvement of about 2dB. A marked improvement, certainly, though x264 is still a more advanced encoder (and one extensively tuned against SSIM).

Would you mind making all the files available (the input file and all your encodes?) I can provide hosting if you’re short on internet-connected disk space.

yuvi: That’s simple: I wanted to have a sane amount of reference frames (i.e. not 16, more like 4 ;)

chris: So you’re saying there’s no way to do proper bitrate-controlled two-pass encoding for Theora, at least not with ffmpeg2theora? That’s a pity.

David: I tried to use Schroedinger (using `ffmpeg2dirac --use-schro`), but it failed to generate any usable bitstreams. Regarding the Dirac options, I must admit that I don’t have any clue which settings make sense and which don’t, and since diracvideo.org was down when I did the test, I didn’t even have access to the spec. I used `--mv-prec 1/8` because for most other codecs, more sub-pel precision is a good thing – again, I didn’t know that this would hurt Dirac. You’re welcome to suggest better settings.

Gregory: I used the Theora encoder from SVN trunk as of 2010-02-04, not the 1.1 release. I just assumed that this is the latest and greatest version – or should I have used a particular branch instead?

I’m planning to publish the scripts I used in an update of this post anyway. I also thought about making at least a few example encodes available here, but my disk quota here is low. Do you really think it makes sense to publish all encoded streams?

@all: Thank you for your feedback (and given that the post has been online for less than half a day, I guess there will be much more soon :). If I get enough input, I might eventually do a re-test with recommended settings from the developers.

I’d somehow missed the part where you said you used the most current versions, but it sounds like I wasn’t incorrect by sheer dumb luck:

Theora development isn’t on Theora trunk, and never has been except for release candidates. Current development happens in theora-ptalarbvorm. I suppose it’s just as well that you didn’t use it: on 2010-02-04, ptalarbvorm had a bug that produced corrupted bitstreams on some inputs.

Though, it looks like some bad decisions with rate control are holding back the improvements on this clip: the SSIM improvement that I’m seeing is only 0.44dB. (Though this is with an SSIM tool which is more faithful than the AviSynth one; YMMV)

Now knowing that you’re using the most current x264, you shouldn’t be surprised to see it nail libtheora on SSIM even in Baseline mode. The temporal RDO feature in current x264 (I guess it’s called “mbtree” there?) provides a pretty big performance boost which is independent of the profile used. An analogous feature has been on the TODO list for Theora for a long time. x264 has about as many lines of x86 assembly as the entire libtheora encoder has lines of C code; it’s simply a more advanced encoder, regardless of the format qualities. Technically, Theora and Baseline H.264 are broadly similar from a coding-tools perspective, so we should expect the performance to converge over time.

A lossless copy of the input clip and one or two representative outputs from each of the formats would be nice; then at least any differences between your results and local results could be attributed to either encoding or measurement.

How’d you manage the 800×336 clip? The original is 1920×800. 800×336 is either cropped or distorted.

Gregory: Good to know that there’s a more up-to-date Theora branch, even though it’s very well hidden ;) If I repeat my tests at some later time (which is highly probable), I’ll use that instead of the normal Theora trunk.

Regarding the SSIM tool, I just wanted something that works and is at least a little bit respected in the community. I’m also a bit suspicious about the so-called »improvements« they made, but I didn’t find another implementation, so I just used that one.

The 800×336 clip is indeed a tiny bit distorted, but the difference in aspect ratio is just 0.8%, which is acceptable in my opinion.
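The 0.8% figure can be checked directly: 1920×800 has an aspect ratio of 2.4, while 800×336 has about 2.381. The snippet also shows that a distortion-free 800-pixel-wide frame would be about 333.3 pixels tall and that 336 is the nearest multiple of 16; whether mod-16 alignment was the reason for choosing 336 is an assumption, not something stated in the post.

```python
src_w, src_h = 1920, 800  # original trailer resolution
dst_w, dst_h = 800, 336   # resolution used in the test

src_aspect = src_w / src_h               # 2.4
dst_aspect = dst_w / dst_h               # ~2.381
distortion = src_aspect / dst_aspect - 1  # relative change in aspect ratio

exact_h = dst_w / src_aspect             # height that would preserve the aspect ratio
nearest_mod16 = 16 * round(exact_h / 16)

print(f"{distortion:.1%}", round(exact_h, 1), nearest_mod16)  # 0.8% 333.3 336
```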

You said “The [x264] developers claim that it’s easily on par with commercial implementations”. This is a very misleading statement as I think you’ll find that they, and many other people, think it is by far the best H.264 encoder available in software or hardware. The way you state it, it appears to be implied that because it is open source it must naturally be inferior.

In one recent test done by an x264 developer (on anime material) he found that x264 Baseline Profile beat every other encoder in the test, including 4 proprietary H.264 encoders using their best quality settings. So saying they think their encoder is on par vastly overstates the quality of the average H.264 implementation and makes it seem odd that you’d be surprised that Theora would be in that same position.

Obviously this makes it tricky to compare H.264 with another format, when one implementation can be 4x better than another widely used one. I just think it’s a shame that Theora keeps getting called crappy, with the implication that the developers are idiots, just because one single implementation of H.264 happens to be so very good. They’re working within very different constraints, most notably one is treading carefully through the patent minefield where the other isn’t. To overlook that is like reporting on a boxing match and failing to mention that one fighter had a hand tied behind his back.

Dapper: I think you misunderstood me. It was not my intention to depreciate x264 or open source software in general. After all, I did this whole test with OSS exclusively, so how could I be against it?

I also want to distance myself from the »Theora is crap« camp. Even though I’m underwhelmed by its performance in my test, I absolutely appreciate the work that guys like Monty and Gregory put into it. I know that it’s very hard to put a (relatively) old format like VP3/Theora in such a good shape over such a short period of time, and the developers have done a tremendous job in doing so. (Oh, and by the way, this has nothing to do with avoiding patents – this has already been taken care of by On2 and Xiph.org in the initial specification of VP3 and Theora. The true problem is living with the restrictions of the format which are a result of patent avoidance.)

Energy efficiency of an encoder is much more important once you have achieved quality and compression that is good enough.

This is important since everything now goes mobile and you want your battery in the phone, video-camera, ipad or laptop to last as long as possible.

perrabyte: Correct. But contrary to what you might think, that’s a point for H.264, not against it: When encoded or decoded on a general-purpose CPU, H.264 is indeed slower (and thus, requires more energy) than most other codecs, except the wavelet ones (like Dirac). But hardware acceleration for H.264 is commonplace, which makes encoding/decoding again much more efficient than any pure software encoder/decoder.

Thanks for the response. Note that patents not only affect codec specifications. Much of the benefit you get after the spec is frozen is due to choosing what to encode and how many bits to use. I’m not a lawyer but I think it’s clear that a patent could easily cover these optimization techniques without it being required to implement a different, but still standards compliant, encoder or a decoder that plays back the result of either. (Apparently the On2 patents for VP3/Theora don’t cover the format itself, just optimizations.)

I realize this will sound like FUD but a complex and featureful H.264 encoder such as x264 is more likely to step on a non-MPEG-LA patent than an H.264 encoder that sticks to the basics. Do the x264 devs care? I doubt it as they don’t worry about the well known MPEG-LA patents either.

But the Theora devs have to, because that is the very reason for the codec to exist. I’m fairly certain it was mentioned on their mailing list that they needed to check out a technique they intended to add to the encoder for patent risk within the last few months, though I can’t google up a link at the moment.

Very nice article, would have been great to see vc-1 in this comparison as well. You can use the free version of microsoft expression encoder to do vc1 encoding.

Would you please share the length of the Star Trek clip that you used in your testing? It might make some of the other time measurements more interesting.

KeyJ:

My observation is that hardware H.264 encoding/decoding capabilities are not commonplace. My netbook certainly does not have them. Watching H.264 in any reasonable resolution is impossible. Progress is made so fast these days that by the time true hardware support is commonplace, it is no longer relevant. We would then have moved on to a different codec. In the end, it will be too costly to implement special hardware support just for one codec, in my opinion.

I’m in favor of using both Theora and H.264 in web video so we can gather some evidence on how well hardware-specific support really works out.

Considering the comments here, it would be great if you would update your findings and provide the source video you used as well. It would be especially important if they show considerable difference and would better assist people in fairly judging the value of the free codecs.

I also think the x264 settings that were used aren’t really useful if you want to compare encoding speeds. By leaving out --slow-firstpass alone you almost double the encoding speed with basically no loss in quality (definitely less than 1%). --subme 10 can be swapped for 9 and --trellis can be left at the default for an even higher speed gain, although at more quality loss. With that you’d get encoding times much closer to XviD and Theora.

--b-pyramid strict only exists for Blu-ray compatibility and isn’t normally used in other scenarios, since it has worse quality than --b-pyramid normal. That shouldn’t have much influence on speed, though.

I’m also surprised that you didn’t use either the “film” or “SSIM” tunings of x264. I’d probably have used “film” since I see the subjective quality as more important than metrics in any codec comparison.

I forgot to mention that this comparison also illustrates why you shouldn’t rely too much on metrics. For instance, the 500 kbps x264 Baseline encode has much lower metrics than the 1000 kbps Theora encode, but the x264 image looks more detailed. Of course, a sample video would be nice to see how well it holds up in motion, but I guess the difference would still hold.

Honestly, for screenshot comparisons of close quality levels, it’s actually very important to make sure the frame types are the same. A p-frame will look much better than a b-frame due to the higher no. of bits allocated, so a single p-frame (say for x264 Main Profile) in the comparison could throw everything off when it’s compared to a b-frame representing x264 High Profile.

Anyway, nice test setup and results, although it looks like some refining of the encoder settings might be in order.

If you scale this webpage down so that the images are cell phone sized, then there is little difference between the original and any of the codecs, especially in action scenes. He he.

Adam: I’m trying to find a way to include VC-1 in the next round. Microsoft offers the VC-1 Encoder SDK for free, and I guess I’ll just write a simple encoder application with that and run it through Wine.

David: It’s 3239 frames at 24 fps (or was it 23.976? Please forgive me the 0.1% error ;).

perrabyte: Somehow your second comment concerns a totally different topic than the first one. The first one was about energy consumption on mobile devices, the second one was about computing power in netbooks. You’re totally right, though – not having proper H.264 acceleration in (relatively) large netbooks while every modern smartphone does have it really sucks :(

nurbs: Thanks for the input. I might even include two different sets of x264 settings in the next round: A very slow, but very high quality one, and a faster but potentially worse one.

creamyhorror: You’re right. However, it’s hard to find a frame which is encoded as a P-Frame by seven different encoders, yet is meaningful enough to make the differences clearly visible. Furthermore, P vs. B doesn’t matter all that much: Since P and B frames are (almost) alternating in most cases, encoders do their best to make sure that there are no large quality differences between them – otherwise, they would produce very annoying temporal noise. The only thing to avoid is I-Frames, because these do have a noticeably different quality level. The two frames I picked were likely not I-Frames on any encoder, by the way, because they were very close to the next scene change.

protips: x264 already has very sane presets that are very good at determining what’s “good” and what isn’t. For example, your --slow-firstpass probably doesn’t even bring a 1% advantage compared to a fast first pass.

To use a preset, add --preset ultrafast, veryfast, faster, fast, slow, slower, veryslow or placebo. The “medium” preset, so to speak, is what you get when you don’t enter anything, and placebo (which is the only preset using --slow-firstpass) doesn’t bring even a 1% advantage over veryslow, so leave it out if you want the comparison to be timely.

Use --tune ssim for x264 so that it disables psychovisual optimizations that “may” hurt SSIM (but provide a better visual appearance).

Your command line would then look like:

```
CMD="x264 --fps $FPS --preset --tune ssim --stats pass1.tmp"
```

I advise you also take the latest git for x264 (and maybe provide the version number in the test).

If you don’t like presets, just run x264 --fullhelp and paste what you want.

Oh, one last thing: the difference between High Profile and Main Profile isn’t as big because the only thing deactivated in Main Profile using your settings is --8x8dct. That’s a very useful setting, enabled by default and deactivated only in ultrafast, but it’s not a “complete revolution” compared to, say, disabling CABAC (10% or more bitrate for -free- http://akuvian.org/src/x264/entropy.png ) when you switch to Baseline or drop B-frames etc.

Last, you’re right about P-frame vs. B-frame not mattering: an encoder should look as consistent as possible over the encode, and a jump in quality in a P-frame, or worse an I-frame, will look horrendous to the viewer (for an example of a horrible encoder with behavior like that, see the live streaming codec the White House website uses; I switched to the C-SPAN website instead because my eyes were bleeding).

If your encoder jumps in quality between frame types, it’s doing something horribly wrong.

If I remember correctly, qpel doesn’t help in xvid save in anime.

GMC, however, would probably help, since it’s an additional mode for the encoder (which would only be selected if it helps).

Esurnir: Thanks, I already got that `--slow-firstpass` was a bad idea ;) Regarding the presets, I’m also convinced now that just picking `--preset slow` (or `--preset slower` in combination with a sane amount of reference frames) would have been the right way to go.

Regarding the differences between the profiles, I’ve already come to the same conclusions as you did (though I didn’t mention them in the article ;).

Good point about qpel, I should check that in a brief pre-test before doing the next round. GMC is out of the question though, because I see no point in generating files with features that no hardware decoder supports :)

Very good independent comparison, despite the many flaws already pointed out. That is very difficult to come by nowadays, ever since Doom9 stopped his. I’m eagerly waiting for the next installment with the suggested corrections.

It would be interesting if you included two sets of results, one focused on compression and the other on speed. For x264, that could be one with --preset slower (to see something close to the maximum sane quality) and one with --preset faster, for an idea of what busy encoding farms use. But this will likely double your work and make the graphs too difficult to read… so I don’t know if it is worth it for every codec. Maybe only for x264, which has a broader range of speeds.

But I’m afraid that --tune ssim may give x264 an unfair advantage, as the other codecs don’t have an option to artificially tune themselves to the SSIM quality metric. Most of them are actually tuned for PSNR. I think leaving out any metric-specific tuning is best.

And I don’t think anyone would oppose dropping the Main vs. High Profile comparison in the next installment, as that will not really change from this one.

And it is likely a good idea to put the Y axis of the graph, the SSIM itself, on a logarithmic scale.
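A common way to get that logarithmic scale is to express SSIM in decibels as -10·log10(1 - SSIM); as far as I know, x264 reports the dB value in its SSIM summary the same way. A minimal Python sketch (the function name is illustrative):

```python
import math


def ssim_to_db(ssim: float) -> float:
    """SSIM on a logarithmic scale: -10 * log10(1 - SSIM)."""
    if ssim >= 1.0:
        raise ValueError("SSIM must be below 1 (identical images map to infinity)")
    return -10.0 * math.log10(1.0 - ssim)


for s in (0.90, 0.99, 0.999):
    print(f"SSIM {s} -> {ssim_to_db(s):.2f} dB")
```

Each extra “nine” adds 10 dB, so points that crowd together just below 1.0 on a linear axis spread out evenly.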

If you want a sane number of reference frames (for example, to target a certain level of hardware compatibility), add --level 3.1 for SD or --level 4.1 for HD; it’ll reduce the number of reference frames accordingly.
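The reason a level caps the reference frames is the decoded picture buffer: each level has a maximum DPB size in macroblocks (MaxDpbMbs in Table A-1 of the H.264 specification), and the number of full frames that fit into it, capped at 16, bounds the reference count. A rough Python sketch of that arithmetic for progressive video; x264’s own check may differ in details (e.g. for interlaced content), and the function name is illustrative.

```python
import math

# MaxDpbMbs for a few levels, from Table A-1 of the H.264 specification.
MAX_DPB_MBS = {"3": 8100, "3.1": 18000, "4": 32768, "4.1": 32768}


def max_ref_frames(width: int, height: int, level: str) -> int:
    """How many full progressive frames fit into the level's DPB (at most 16)."""
    frame_mbs = math.ceil(width / 16) * math.ceil(height / 16)  # 16x16 macroblocks
    return min(MAX_DPB_MBS[level] // frame_mbs, 16)


for w, h, lvl in ((720, 576, "3.1"), (1280, 720, "4.1"), (1920, 1080, "4.1")):
    print(f"{w}x{h} @ level {lvl}: up to {max_ref_frames(w, h, lvl)} reference frames")
```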

The amount of detail you went into was great, and the image comparison is amazing in it’s simplicity and it’s usefullness. This is by far the best codec comparison I’ve ever seen in my life. This article is a shiny example of the perfect and fair comparison.

I’m pretty sure it’s not fair to compare these codecs if the key intervals aren’t set the same. For example, x264 default keyint is 250, but it is 64 in Theora and you set it to 18 in MPEG-2.

R.T.Systems: The differences are fully intentional – I wanted to have the bitstream parameters of each codec to be close to the typical settings. For x264 and XviD, this means very long GOPs, for MPEG-2 it’s normal to have short GOPs (in fact, 18 frames is already quite long for MPEG-2). Regarding Theora, who am I to argue with the default settings? :) Anyway, 64 seems to be a sane value for that codec, considering it doesn’t have B frames. Moreover, I doubt that this has any serious influence anyway, because the test clip has mostly short scenes (much less than 2 seconds).
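The argument about short scenes can be checked with a simplified model: assume the encoder places a keyframe at every scene cut and, within a scene, forces another one every keyint frames. The scene lengths below are made up (1.6 s, 1.2 s and 1.8 s at an assumed 25 fps); they are not measurements of the actual test clip, and the function name is hypothetical.

```python
import math
from typing import Iterable


def keyframe_count(scene_lengths: Iterable[int], keyint: int) -> int:
    """Simplified model: one keyframe per scene cut, plus one every `keyint` frames inside a scene."""
    return sum(math.ceil(length / keyint) for length in scene_lengths)


scenes = [40, 30, 45]  # hypothetical scene lengths in frames
for keyint in (250, 64, 18):
    print(f"keyint {keyint}: {keyframe_count(scenes, keyint)} keyframes")
```

Under this model, keyint 250 and 64 give the same keyframe count whenever all scenes are shorter than 64 frames; only the short MPEG-2 GOP adds extra I-frames.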

What about --speedlevel 0 for ffmpeg2theora?

You could have used one more graph: quality vs. decoding time.

A properly coded B-frame should look great, since it builds on the frames it references (both before and after it). Oddly enough, a B-frame on its own is a blocky, distorted, discolored mess.

On acceleration: Some of us may still remember when laptops shipped with DVD decoder chips, and graphics cards advertised hardware decoding.

More and more new netbooks have no trouble with video up to 720p; some can handle 1080p High Profile with less than 50% CPU.

The VC-1 codec is sorely missing in this list.

As a popular streaming codec it should really feature.

As a popular streaming codec, it is supposed to combine high-quality video with excellent client-side decoding performance.

@Rob: Since the tests are done on Linux, you would need an open-source VC-1 encoder; all VC-1 encoders today are Windows-only.

Besides, the tests were done at “offline encoding” speed. If you want a “streaming test”, you would need a setup in which every encoder encodes at around 30 fps.

Let’s wait and see how VP8 performs.

In my experience, ffmpeg2theora is not suitable for this kind of comparison, as it produces inferior (read: awful) results. Using a GStreamer pipeline has proved to produce much better results.

I may have missed this, but I think the conclusion misses the real issue in the whole debate.

There is no debate that an x264 video will most likely look much better than a theora encoded video. It’s more mature, etc. etc.

But that’s not the issue. The issue is that MPEG LA reserves the right to charge end users for using its intellectual property. Theora is pretty free: if you can write an encoder/decoder, you can use it anywhere. H.264 is not even remotely the same. Its patent pool is administered by MPEG LA, and that group controls the rights. If they decide in 4 years to triple the license price for H.264 encoding, they will have the ‘right’ to.

A better comparison would be to say: look, here’s Theora, and it’s free, and it’s pretty good. Here’s H.264; it’s more mature and better in some ways, but at any point in the future they can charge you through the nose.

Mathias: I’d like to see that, too. But unless something miraculous happens, I guess On2 won’t make an encoder available for the public again :(

FGD: Thanks for the hint, I’ll check this. I just hope that you are not right, because I find GStreamer too complex, but that’s a matter of personal taste :)

Cary: Maybe you didn’t read the first paragraph in the post properly – it clearly states that I don’t give a f*** about that political bullshit. Sorry to be so drastic, but it’s my clear intention to keep this pointless debate out of here.

Since it is understandable that you are wary about the bandwidth, maybe you can upload the test clips to a file hosting site like Megaupload: http://www.megaupload.com/

When will you do a new comparison? It’s obvious from the comments that the current one isn’t all that accurate anymore…

DaVince: I guess it will be around May, but I won’t promise anything :)

Very nice, about what I expected, except that I am surprised that MPEG-2 is still almost competitive with XviD… and it seems that benchmarks in which Theora can seriously compete with x264 are most likely seriously flawed.

By the way, if you want some relatively fast settings for x264 with decent quality, try these (with High Profile):

-b 4 -m 6 -r 4 --no-chroma-me --me dia -A "p8x8,b8x8,i8x8" --no-dct-decimate --no-mixed-refs

I am not sure how they compare to XviD, but they should be only a bit slower, if at all, with a much better quality rating at ~120% or so.

The biggest question I have is how all these compare to VP8, now that Google is likely to open it up and use it for HTML5.

Ross: That’s the big question, no doubt. I will include VP8 in the next round of decoder tests for sure. If the guys at On2 really did their homework, VP8 should be near x264 Baseline Profile in terms of efficiency.

This was a very nice comparison. I have been doing the same, and I come to the same conclusion as you. Theora requires about 2 times the bandwidth to reach the same quality as x264. Dirac is just useless, except for almost lossless compression.

It’s interesting to see how small the SSIM gain becomes at bitrates over 1500kbps (for x264). I realize that you have used a downscaled sample here, but I wonder what it looks like for 720p or 1080p. People like to toss around 4000kbps as a recommended bitrate for 720p, but is it really necessary?

You have to be careful when comparing SSIM, because when you get closer to 1 smaller differences in SSIM represent relatively larger differences in quality. For instance 0.98 is twice as good as 0.96, which is twice as good as 0.94. Generally you should use 1/(1-SSIM) if you want the differences on a linear scale.

About bitrates: I generally encode my Blu-rays downscaled to 720p (actually 1280*xxx, depending on the aspect ratio of the movie) with --crf 21 in x264, which lets the codec decide the bitrate based on the complexity of the video. The quality is good enough for me, and while I can tell the difference when I’m looking for it, it’s nothing I’d notice when I’m just watching the movie.

With that setup I get bitrates mostly between 2 and 4 Mbps, so depending on the content and the level of quality you are satisfied with 4 Mbps is probably a reasonable bitrate for 720p if you want rather good quality on most sources. Stuff like 300 or Planet Terror will probably take more than that if you want transparency to the source. Generally grainy or old footage takes more bitrate, while the new stuff shot with digital cameras can be compressed better because there is less noise and grain.
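To put those bitrates into perspective, the size of a stream follows directly from its average bitrate and duration. A tiny Python sketch (video only, ignoring audio and container overhead; the two-hour runtime is just an example and the function name is illustrative):

```python
def file_size_bytes(kbps: float, seconds: float) -> float:
    """Size of a stream at a given average bitrate (1 kbps = 1000 bit/s)."""
    return kbps * 1000 * seconds / 8


two_hours = 2 * 60 * 60
for kbps in (2000, 4000):
    print(f"{kbps} kbps for 2 h -> {file_size_bytes(kbps, two_hours) / 1e9:.1f} GB")
```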

Edit: Damn it, I meant: For instance 0.98 is twice as good as 0.96, which is twice as good as 0.92.
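The 1/(1 - SSIM) rule is easy to apply. A minimal Python sketch (function names are illustrative) that reproduces the corrected example of 0.98 vs. 0.96 vs. 0.92:

```python
def ssim_linear(ssim: float) -> float:
    """Map SSIM to a roughly linear quality scale: 1 / (1 - SSIM)."""
    if ssim >= 1.0:
        raise ValueError("SSIM must be below 1")
    return 1.0 / (1.0 - ssim)


def times_better(a: float, b: float) -> float:
    """How many times 'better' SSIM a is than SSIM b on that scale."""
    return ssim_linear(a) / ssim_linear(b)


print(round(times_better(0.98, 0.96), 2))
print(round(times_better(0.96, 0.92), 2))
```

Both ratios come out at 2, even though the first step is only 0.02 in raw SSIM and the second is 0.04.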

pafnucy: I’m already thinking about doing the next comparison in 720p anyway, so we’ll find out.

nurbs: In fact, I use --crf for my personal encodes all the time, because it saves you the second pass without sacrificing quality. The only downside is that you don’t have any kind of direct control over the target bitrate, but since I’m usually encoding files for online distribution or hard drive storage, this isn’t an issue. For the codec comparison, however, two-pass encoding is the only way, because I want a specified target bitrate there.

Yes, --crf is pretty useless for comparisons like this one, but for archiving your DVD collection on a hard disk it’s great. You can just reuse the settings for more or less every encode, maybe with a slightly higher CRF for high resolutions or when encoding cartoons; you save time and you get the quality you want.

A common mistake people make is picking a CRF value, then changing an option to see how the file size changes, and then using the size difference to determine how much that option changes compression efficiency. That doesn’t work because, while the simple explanation of CRF encoding is that it produces constant quality, the options used change the way the rate factor is calculated. So you pick a slower option, get a larger file and end up confused. This is particularly apparent if you turn off the psy stuff, for instance by using --subme 5, and compare it to the defaults: you end up with higher encoding speed and a smaller file.
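Because equal CRF values don’t imply equal quality across settings, a fairer check is to compare settings at the same bitrate, which is what fixed-bitrate two-pass encodes give you. If you have several (bitrate, quality) points per setting, one simple approach is to interpolate between them. The sketch below uses plain linear interpolation (more careful comparisons such as BD-rate fit curves instead); the function name and the sample numbers are invented for illustration.

```python
from bisect import bisect_left
from typing import List, Tuple


def quality_at(points: List[Tuple[float, float]], kbps: float) -> float:
    """Linearly interpolate quality (e.g. SSIM) at a bitrate from (kbps, quality) points."""
    pts = sorted(points)
    rates = [r for r, _ in pts]
    if not rates[0] <= kbps <= rates[-1]:
        raise ValueError("bitrate outside the measured range")
    i = bisect_left(rates, kbps)
    if rates[i] == kbps:
        return pts[i][1]
    (r0, q0), (r1, q1) = pts[i - 1], pts[i]
    return q0 + (q1 - q0) * (kbps - r0) / (r1 - r0)


# Hypothetical measurements: two settings, each encoded at the same target bitrates.
setting_a = [(500, 0.930), (1000, 0.955), (2000, 0.970)]
setting_b = [(500, 0.925), (1000, 0.952), (2000, 0.969)]
diff = quality_at(setting_a, 1500) - quality_at(setting_b, 1500)
print(f"A - B at 1500 kbps: {diff:+.4f}")
```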

Nurbs: thanks for your answer, it was very informative.

KeyJ: definitely looking forward to it!

I am also curious how well animated sources are compressed. I have come across http://x264dev.multimedia.cx/?p=102, but the source used there seems to have been SD as well, and the output bitrate is just 250kbps. If it’s not too much of a hassle, could you include an HD cartoon source in the next instalment as well?

For your next comparison, you should use an uncompressed video as source.

See: http://media.xiph.org/

CableCat: I’d absolutely love to use losslessly compressed video as source material for the test! But alas, there’s no proper material available that I know of. What I need for the test is:

As cool as »Big Buck Bunny« and »Elephants Dream« might be, they barely fulfill point 4 and they absolutely miss point 5. When comparing codecs, real-world images, CG and cartoons are three completely different worlds. I’d like to focus on real images, but if I do include CG imagery as well (I’m not sure yet), I’ll certainly use »Big Buck Bunny«, as it’s just perfect for that case.

As a lossless real-world video, you could use Tallship. It was used on the x264 Blu-ray demo disc, so I guess it’s free to use. The only problem is that it’s 1080i30, so it fails your second criterion, plus there isn’t much action. The x264 people used AviSynth to convert it to 720p60/1.001 for their demo.

Video: http://video-test-sequences.hexagon.cc/torrents

Audio: http://cid-bee3c9ac9541c85b.skydrive.live.com/browse.aspx/.Public/LadyWashington

Demo disk info: http://x264dev.multimedia.cx/?p=328

Avisynth script: http://forum.doom9.org/showpost.php?p=1394744&postcount=622

This page contains some very high quality source material:

http://www.microsoft.com/windows/windowsmedia/musicandvideo/hdvideo/contentshowcase.aspx

Here some more:

http://www.hdgreetings.com/other/ecards-video/video-1080p.aspx

Very good review. I hope you will update this every half a year but I guess it’s cumbersome. Thanks for all the information though!

Congrats on a good review. libvpx is now available, and so is an updated schroedinger 1.0.9 which would be good to add to the graph. I am more interested in 1 pass encoding for real time streaming applications so I’m trying to build my own test rig for that.

Something that confuses me about codec authors: if you develop a codec, you want to make sure it performs better than what’s already available. But they don’t seem to do that, or at least it’s not advertised. What would be great is a standard test system where every codec can be run against a fixed set of ‘useful’ video clips and the output plotted on a graph. Interested?

Also, I use raw 1080p clips from http://media.xiph.org/video/derf/, which are OK for what I do at the moment. If you need minutes’ worth of raw 1080p video, it might be worth joining all the clips together?
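Joining clips is straightforward if they are YUV4MPEG2 (.y4m) files with identical parameters: a Y4M stream is one text header line followed by FRAME records, so you keep the first header and append the frames of every clip. A hedged Python sketch; the function name is hypothetical, and it deliberately refuses clips whose headers differ, since mixing sizes, frame rates or chroma formats would need a conversion first.

```python
import io
import shutil
from typing import BinaryIO, Iterable


def concat_y4m(inputs: Iterable[BinaryIO], output: BinaryIO) -> None:
    """Write one Y4M stream header, then the FRAME records of every input clip."""
    header = None
    for src in inputs:
        clip_header = src.readline()  # e.g. b"YUV4MPEG2 W1920 H1080 F50:1 Ip A1:1 C420jpeg\n"
        if not clip_header.startswith(b"YUV4MPEG2 ") or not clip_header.endswith(b"\n"):
            raise ValueError("not a YUV4MPEG2 stream")
        if header is None:
            header = clip_header
            output.write(header)
        elif clip_header != header:
            raise ValueError(f"clip parameters differ: {clip_header!r} vs {header!r}")
        shutil.copyfileobj(src, output)  # the FRAME records are copied unchanged


# Tiny in-memory demo: two 2x2 4:2:0 clips with one frame each (4 Y + 1 Cb + 1 Cr bytes).
hdr = b"YUV4MPEG2 W2 H2 F25:1 C420jpeg\n"
clips = [io.BytesIO(hdr + b"FRAME\n" + bytes([v]) * 6) for v in (0, 1)]
merged = io.BytesIO()
concat_y4m(clips, merged)
```

For real files, pass handles opened with open(path, "rb") and an output opened with "wb"; the data is streamed, so multi-gigabyte clips don’t have to fit into memory.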

I’d like to see WebM (VP8) compared, now that Chrome is dropping support for closed-source codecs.

Very nice post, I surely love this site, keep it up.

I don’t understand one thing. You said that you were interested in the algorithmic complexity of the codecs, so you added the times from two encoding passes and subtracted the decoding time!? Why?

Stjepan: That’s not quite the case. What I do is make three encoding runs and take the minimum time. This way, I select the run in which external influences (interruptions from other processes, cold caches, swapping etc.) were minimal, because this is closest to pure CPU time, but still has a relation to real (»wall clock«) time.

Subtracting the decoding time means subtracting the time required to decode the video input for the encoder, which is the same across all encoders. This has nothing to do with the time required to decode the encoded video.
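The timing method described above (three runs, keep the fastest, subtract the input-decoding overhead) can be sketched in Python. The function names and the sample numbers are hypothetical; input_decode_time is assumed to be the total time spent just decoding the source within one run.

```python
import time
from typing import Callable, List


def time_runs(encode: Callable[[], None], runs: int = 3) -> List[float]:
    """Wall-clock duration of several identical encoding runs."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        encode()
        durations.append(time.perf_counter() - start)
    return durations


def net_encode_time(durations: List[float], input_decode_time: float) -> float:
    """Least disturbed (fastest) run minus the time spent only decoding the input."""
    return min(durations) - input_decode_time


# Hypothetical numbers in seconds: three runs, input decoding alone took 1.4 s.
print(round(net_encode_time([12.5, 11.9, 12.2], 1.4), 2))
```

With a real encoder, encode could be something like lambda: subprocess.run(cmd, check=True), where cmd is the encoder’s argument list.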

Is there any chance of a new, updated test? After 2 years it would be very useful for everyone. What I’d really like to know is which codec gives reasonable quality with fast encoding and decoding.

Very nice article. I had not ventured into codec comparisons since the days of DivX/XviD.

An update for 2013 would be much appreciated

Thank you

Could do this for H.265

Now, in 2016, we’d be pleased to see an update of this excellent article, including the new kids on the (macro)block: VP8, VP9 and HEVC.

We’d also like to see hardware H.264 implementations (VA-API, VDPAU) compared.

Single-pass “low latency” (videoconferencing) encodings would also be good to compare, because many people prefer hardware encoding without taking into account how inferior its quality is.