nVidia driver bug?

While testing my current work-in-progress demo on my brand-new Vista-powered and GeForce-8-equipped laptop, I noticed some really strange rendering glitches. Since this was the only machine where the bug occurred, I thought it would be some bug in my code that caused incompatibilities with that particular driver version for that particular chip revision, or perhaps with Vista. However, a friend had the very same problem on a GeForce 7 card, Windows XP and a much older driver than the one I use on my main development PC, which has an nVidia card, too. This meant that the problem needed some serious debugging :)

The notebook still had its original junkware-infested standard Vista installation, and I didn’t want to install Visual Studio and all that stuff onto a Windows installation that was going to be killed the next day anyway. So I debugged “remotely”, that is, I started Visual Studio on my other notebook via Remote Desktop and launched the compiled executable locally. Strangely enough, the bugs did disappear! I found out that the problem only occurred if the program was run locally – when run directly from a network share, everything was OK. At least for the first time; sometimes, the bug reappeared if run again. Also, the behavior was dependent on the number of printf()s in the code, which is really strange.

Anyway, I found a stable, but unsatisfying, solution. The code that failed was a routine that loaded simple geometry data (vertex data and indices) from disk. It did so by allocating memory, loading the whole file into the newly-allocated memory block, passing the loaded data directly into an OpenGL display list (via glInterleavedArrays() and glDrawElements(), surrounded by glNewList(..., GL_COMPILE)…glEndList()) and deallocating the memory afterwards. I figured it must be a problem with my file loader routine, which is actually much more complicated than the description I just gave. By not deallocating the memory after compiling the display list, the problem went away, reliably. So I stopped debugging at this point – I knew that it was just a hack that only hid some serious bug in my memory management code (at least, so I thought), but I didn’t want to dig any deeper then. Anyway, it’s just a demo; clean programming is not a requirement there :)

During the three-hour ride from Glauchau to Hannover today, the real reason for the bug suddenly occurred to me: It’s not a memory management problem, it’s a mere race condition!

Analysis

If I read the spec correctly, glDrawElements() should be an atomic operation as far as the application’s data is concerned: It should not return until all the vertex data for that call has been read, even if the actual drawing finishes later. My suspicion is that the nVidia OpenGL driver violates this constraint due to optimization: It seems that the driver tries to make use of multi-thread or multi-core facilities as well as possible, executing parts of the driver on a different CPU or in a different thread than the controlling OpenGL application. This is usually a good idea, because programmers are mostly lazy and don’t optimize for multiprocessing systems, so they can at least profit from the driver’s multi-CPU features. However, the nVidia approach might be a little bit over the top.

It looks like the nVidia driver defers glDrawElements() to the second core on multi-core processors, processing the vertices »in the background« while the application code continues to run. The problem is that the application may change the vertex data right after the glDrawElements() call: My code deallocated the data block, reallocated it (which usually yields the same address!) and loaded fresh vertex data from disk. From the viewpoint of the driver (which runs on the other CPU core) the vertex data suddenly changed during processing, so I got a weird mixture of vertices from the old and the new model.

This explanation is plausible, because it explains all of the strange behavior I saw:

- Both computers that showed the problem were equipped with dual-core CPUs of different manufacturers.

- My own development computer did not show the problem, even though it had an nVidia GPU and a (most probably) vulnerable driver. The reason: It only has a single-core CPU, so the OpenGL ICD runs in-thread.

- Another dual-core computer with ATI graphics did not show the problem.

- Execution via a network share did not show the problem in most cases, because the OpenGL driver was finished processing the vertex data before the network handshake finished.

- The second execution mostly failed because the data was loaded much more quickly due to caching.

- Additional printf()s caused the application thread to run slower, so the driver had more time to finish its processing – hence, the problems vanished when debugging output was verbose enough.

- Not freeing the allocated memory solves the problem because the new vertex data will always be loaded into another, unique memory location. No vertex data will be overwritten.
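
The suspected mechanism can be modeled without any OpenGL at all. The following is only a minimal single-threaded sketch of the idea, not the driver's real design: `ModelDriver`, `draw_deferred()`, `draw_synchronous()`, `driver_catch_up()` and `first_model_survives()` are hypothetical names made up for this illustration. It also simplifies two things: the buffer is overwritten in place instead of being freed and reallocated, and the "background" work reads everything at once, so the result is all-old or all-new rather than the mixture seen in the real race.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_FLOATS 16

/* Hypothetical stand-in for a driver; an assumption, not nVidia's design. */
typedef struct {
    const float *pending_src;   /* client pointer remembered by a deferred draw */
    size_t pending_count;       /* number of floats still to be read */
    float consumed[MAX_FLOATS]; /* the data the "driver" ended up reading */
} ModelDriver;

/* Suspected behavior: remember the pointer and return immediately. */
static void draw_deferred(ModelDriver *d, const float *src, size_t count)
{
    assert(count <= MAX_FLOATS);
    d->pending_src = src;
    d->pending_count = count;
}

/* Expected behavior: read all client data before returning. */
static void draw_synchronous(ModelDriver *d, const float *src, size_t count)
{
    assert(count <= MAX_FLOATS);
    memcpy(d->consumed, src, count * sizeof *src);
}

/* The "background" work catching up at some later point in time. */
static void driver_catch_up(ModelDriver *d)
{
    if (d->pending_src != NULL) {
        memcpy(d->consumed, d->pending_src, d->pending_count * sizeof(float));
        d->pending_src = NULL;
        d->pending_count = 0;
    }
}

/* Returns 1 if the driver read model A intact, 0 if it picked up model B. */
static int first_model_survives(int deferred, int reuse_buffer)
{
    ModelDriver d = {0};
    float model_a[3] = {1.0f, 2.0f, 3.0f};
    float other[3];
    float *next = reuse_buffer ? model_a : other;

    if (deferred)
        draw_deferred(&d, model_a, 3);
    else
        draw_synchronous(&d, model_a, 3);

    /* The application loads the next model right after the draw call. */
    next[0] = -1.0f; next[1] = -2.0f; next[2] = -3.0f;

    driver_catch_up(&d);
    return d.consumed[0] == 1.0f && d.consumed[1] == 2.0f && d.consumed[2] == 3.0f;
}
```

In this model, `first_model_survives(0, 1)` returns 1 (a synchronous draw is immune to buffer reuse), `first_model_survives(1, 1)` returns 0 (a deferred draw plus a reused buffer loses the first model), and `first_model_survives(1, 0)` returns 1 again, which corresponds to the "don't free the memory" workaround.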

Confirmation – Help needed!

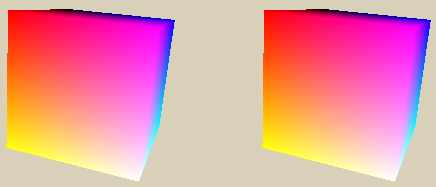

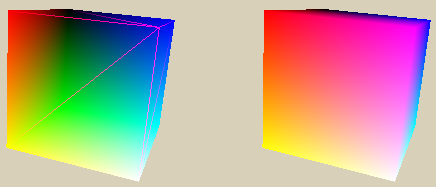

To verify my assumptions, I wrote a minimal application that exhibits the bug. It renders a hard-coded cube model (corner coordinates [-1, -1, -1] and [1, 1, 1], colors map to [0, 0, 0]…[1, 1, 1] along the axes) into a display list via glInterleavedArrays() / glDrawElements(). After that, it modifies the model data in-place by inverting all the vertex coordinates (both position and color) and fixing the vertex indices so that the resulting model will be visually identical to the original. This model will be compiled into another display list. The contents of both lists are then shown next to each other. Normally, both cubes should look exactly the same. However, on nVidia chips and multi-core CPUs, they don’t – the first model will be broken in some way because the modifications take place during the rendering.
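
The in-place modification can be sketched roughly as follows. This is not the code from nvbug.c, just an illustration under assumptions: the `Vertex` layout (color first, then position, as `GL_C3F_V3F` would expect), the bit-encoded corner numbering, the quad index table and all function names are made up for this example. With that numbering, inverting corner i yields exactly the data that corner i XOR 7 had before, so flipping the low three bits of every index makes each index slot resolve to the same position and color as in the original model.

```c
#include <assert.h>
#include <stddef.h>

#define CUBE_VERTS   8
#define CUBE_INDICES 24  /* 6 quads with 4 indices each */

/* Color first, then position (assumed layout, as for GL_C3F_V3F). */
typedef struct { float r, g, b, x, y, z; } Vertex;

/* Hypothetical quad list; bit 0/1/2 of a corner number selects x/y/z. */
static const unsigned char quad_indices[CUBE_INDICES] = {
    0, 4, 6, 2,   1, 3, 7, 5,   /* x = -1, x = +1 */
    0, 1, 5, 4,   2, 6, 7, 3,   /* y = -1, y = +1 */
    0, 2, 3, 1,   4, 5, 7, 6    /* z = -1, z = +1 */
};

/* Corners from [-1,-1,-1] to [1,1,1]; colors map to 0..1 along the axes. */
static void build_cube(Vertex v[CUBE_VERTS])
{
    for (int i = 0; i < CUBE_VERTS; i++) {
        float bx = (float)(i & 1);
        float by = (float)((i >> 1) & 1);
        float bz = (float)((i >> 2) & 1);
        v[i].r = bx; v[i].g = by; v[i].b = bz;
        v[i].x = 2.0f * bx - 1.0f;
        v[i].y = 2.0f * by - 1.0f;
        v[i].z = 2.0f * bz - 1.0f;
    }
}

/* Invert positions and colors, then remap indices to the opposite corner. */
static void invert_in_place(Vertex v[CUBE_VERTS], unsigned char idx[CUBE_INDICES])
{
    for (int i = 0; i < CUBE_VERTS; i++) {
        v[i].r = 1.0f - v[i].r; v[i].g = 1.0f - v[i].g; v[i].b = 1.0f - v[i].b;
        v[i].x = -v[i].x; v[i].y = -v[i].y; v[i].z = -v[i].z;
    }
    for (int k = 0; k < CUBE_INDICES; k++) {
        assert(idx[k] < CUBE_VERTS);
        idx[k] ^= 7;  /* corner i now holds what corner i ^ 7 held before */
    }
}

static int same_vertex(const Vertex *a, const Vertex *b)
{
    return a->r == b->r && a->g == b->g && a->b == b->b &&
           a->x == b->x && a->y == b->y && a->z == b->z;
}

/* Returns 1 if every index slot still resolves to the same vertex data. */
static int inverted_cube_matches_original(void)
{
    Vertex original[CUBE_VERTS], work[CUBE_VERTS];
    unsigned char idx[CUBE_INDICES];

    build_cube(original);
    build_cube(work);
    for (int k = 0; k < CUBE_INDICES; k++)
        idx[k] = quad_indices[k];

    invert_in_place(work, idx);

    for (int k = 0; k < CUBE_INDICES; k++)
        if (!same_vertex(&original[quad_indices[k]], &work[idx[k]]))
            return 0;
    return 1;
}
```

Because every slot of the index list ends up pointing at identical data, a correct driver has to produce two identical display lists, although every single float and index in memory has changed between the two draw calls.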

The source code can be found here:

nvbug.c

And the executable here:

nvbug.exe

The program is available for Windows only because I already confirmed that the Linux drivers are not affected.

WARNING: This program will crash some driver versions with a bluescreen. So be warned and save your work before executing the program.

I’d love to hear from readers who tried this simple test program:

- the result

  - Do the cubes look identical? (i.e. it works)

  - Do they differ? (i.e. bug observed)

  - Does the system crash? (I hope not :)

- the name of your graphics chip (e.g. »GeForce 8600M GT«)

- your operating system version (e.g. »Windows XP SP2«)

- your graphics driver version (e.g. »158.22«)

- your CPU type (e.g. »Intel Core 2 Duo T7300 @2.0 GHz«)

Or alternatively, if you find a bug in my code or think my assumption of the atomicity of glDrawElements() is not correct, don’t hesitate to tell me that, too.


GeForce 5900XT

Windows XP SP2

forceware 93.71

Athlon XP 2700+

It’s working.

It works as long as you turn off the threaded optimizations in the driver options panel. Many GL based demos expose this bug, e.g. “The Prophecy” by Conspiracy.

Geforce 7800GT

Windows XP SP2

Forceware 93.71

Athlon XP 3700+

working

GeForce 6150

Windows XP Professional x64

Forceware 97.92

AMD Turion 64 X2 TL-50

Bug observed.

Stumbled across this blog while I was googling for something different. Did not read all of what you wrote, but two thoughts crossed my mind. I didn’t look up the exact definition of glInterleavedArrays right now, but it might just take a pointer to your vertex data without copying the data. If that is the case, it would be clear that the memory cannot be released until the very end of the application. The other gotcha that came to mind is that there are some OpenGL commands that cannot be stored in a display list. glInterleavedArrays is one of them.

Hope that helps.

OpenGL vendor: NVIDIA Corporation

OpenGL renderer: GeForce Go 7600/PCI/SSE2

OpenGL version: 2.1.1

CPU name: Genuine Intel(R) CPU T2300 @ 1.66GHz

Cubes look different

It’s a dual core notebook indeed.

That’s right, glInterleavedArrays() only takes a pointer that must remain valid until the next call to glDrawArrays() or glDrawElements(). It’s also correct that glInterleavedArrays() isn’t stored in display lists, since it’s a client-side function. The problem is that glDrawElements() doesn’t synchronize correctly. The manual page says:

I read this as “your vertex data is safe when glDrawElements() returns”. However, in nVidia’s implementation, it isn’t.

What’s odd about this error is that it only seems to appear on dual-core CPUs, but not on dual-CPU machines (at least on the systems I’ve tested). Also, the threaded optimization is present on drivers for other platforms, but there is no way to disable the feature like there is in Windows. I hope they allow this feature to be disabled on other platforms because it causes issues in a number of OpenGL-based programs, and since other operating systems don’t have DirectX, there is no choice but to use OpenGL. There may also be some sort of conflict with the “Maximize texture memory” setting that is available on Quadro cards, but I’m not entirely sure (still testing).

Scratch what I said about it not appearing on dual-CPU machines, I just saw it happen today. It turns out that this OpenGL bug only appears on GeForce cards (actually the drivers), but not on Quadros. I just performed a few tests on dual-CPU and dual-core machines and I used Rivatuner to change the device IDs of the cards to switch between GeForce and Quadro capabilities. Even using a driver version that works for both GeForce and Quadro cards (in this case 162.62), the bug goes away when the cards are seen as Quadros and it re-appears when they are seen as GeForce, so the optimizations must be done differently depending on how the drivers see the card. I had the “Threaded optimization” setting forced to “On”, so that was not the difference.

Are you still seeing this bug? Do you ever get glErrors before incorrect rendering? And does turning off driver threading fix it?

I ask because I am getting reports of problems with glDrawElements with VBOs on NV hardware, and it seems to be coming from dual-core machines. Other programmers have complained that threaded drivers cause other sets of errors…

Ben: I didn’t test with newer drivers, but you have a good point – I should give some recent 169-series drivers a try. Anyway, I never got any glErrors and yes, turning off “threaded optimizations” does fix the problem. It’s also not surprising that you get this problem with VBOs, too; in fact, I believe that every pointer-based bulk transfer to the graphics card might be vulnerable in one way or the other. For example, during development of 8-Bit Wonderland we also saw a similar-but-different issue with ATI cards and texture data, even on single-core machines – it simply crashed! A mere glGet(GL_SOMETHING) after the problematic operation fixed the problem, as it does for this nVidia issue.

Hi KeyJ,

the 2 cubes look the same on my system. I own a Samsung R55 with a GeForce Go 7400. I’m using Windows Vista Home Premium and the driver version is the 167.45 from 12.11.2007 (with modded inf, so it runs on this device). The output of your program is like this:

OpenGL vendor: NVIDIA Corporation

OpenGL renderer: GeForce Go 7400/PCI/SSE2

OpenGL version: 2.1.2

CPU name: Intel(R) Core(TM)2 CPU T5200 @ 1.60GHz

Happy christmas and a happy new year. Keep on releasing great demos :)

I am using dual displays.

on screen 2 everything works perfect.

on screen 1 the boxes are frozen!!!

Any ideas to solve the issue?

OpenGL vendor: NVIDIA Corporation

OpenGL renderer: GeForce 8600 GTS/PCI/SSE2

OpenGL version: 2.1.2

CPU name: Intel(R) Pentium(R) D CPU 3.00GHz

okay figured it out. there is a multi display performance mode, which also enables opengl for the other display. my issue is resolved.

btw i m using xp 32bit.

GeForce Go 7400 Driver

Dated 2/20/2007

Version 7.15.10.9813

CPU T2400 @1.83Ghz

RAM: 1022 MB (that’s what Vista reports!)

OS: Vista / XP Dual Boot

Bug observed under Vista unless you turn off threaded optimizations in the driver. Bug NOT observed under XP.

I’d also like to add that our own OpenGL program FREEZES instantly under this driver when using Software Rendering, regardless of the settings in the driver. I don’t know if you can or are interested in building a software rendered version of your test… but just to add the data point…

Geforce 7800GT

opteron 165

win XP sp2

driver : dunno

pass …

same card

core 2 duo 4500

win XP sp2

forceware 175.19

pass also …

but

I figured out that one of my OGL based program crashes on the pentium. It seems that the glDrawArrays fails when

– process affinity = 3 (2 cores)

– nvidia driver threaded optimization on

fine if process affinity = 1 or 2.

I do change the vertices every frame.

I m not expert in X86 CPUs but i think the issue comes from the vertices still in the cache of one core.

Actually, I don’t free or allocate the memory during normal program execution.

my sequence is

    main()
    {
        set cpu affinity = 3
        allocate vertices
        loop
            poll windows events
            call render()
        end loop
    }

    render()
    {
        change some 1M vertices
        call glDrawArrays
        swap buffers
    }

Windows XP SP3

Geforce 6200 (256MB)

driver: 178.24

your program works fine

however i was led to this site while i was investigating a crash that happens in a program i use. it crashes with glDrawArrays and not glDrawElements o_O;

related?