H.264 Decoder Benchmark, Part 2

After the H.264 decoder benchmark I did when DivX 7 came out, some commenters said I had misrepresented CoreAVC by using an outdated version. I have now repeated the benchmarks with the newest version of CoreAVC, fresh from its website. I also tested the decoders on more computers, and the results are very interesting.

Methodology

The test sequences are the same as in the last benchmark: A high-rate CABAC 720p stream (stargazer), a low-rate CAVLC 1080p stream (nine) and a high-rate CABAC 1080p stream (masagin). I changed the tools, though: The speeds are now measured with the built-in benchmark option of Monogram GraphStudio. This eliminates any influence of the video renderer, because GraphStudio simply uses a Null Renderer (the DirectShow equivalent to /dev/null) instead.
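To illustrate the principle: a null-renderer benchmark times only the decoding and throws every decoded frame away, so display cost never enters the measurement. The Python sketch below is not GraphStudio's code; `decode` is a hypothetical stand-in for a real decoder call, and the sketch assumes each packet yields exactly one frame.

```python
import time
from typing import Callable, Iterable


def benchmark_decoder(decode: Callable[[bytes], object], packets: Iterable[bytes]) -> float:
    """Return frames per second while discarding every decoded frame.

    `decode` is a hypothetical per-packet decoder call. Its result is
    dropped immediately, which plays the role of a Null Renderer.
    Assumption: one packet produces one frame.
    """
    frames = 0
    start = time.perf_counter()
    for packet in packets:
        decode(packet)  # output goes nowhere, like /dev/null
        frames += 1
    elapsed = time.perf_counter() - start
    return frames / elapsed if elapsed > 0 else float("inf")
```

For example, `benchmark_decoder(lambda p: p[::-1], [b"\x00" * 4096] * 200)` times a dummy "decoder" that just reverses each packet.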

All tests were performed on Windows XP with power management disabled. For the single-core tests, I pinned the graphstudio.exe process to one CPU core right after starting it, before any video had been loaded.
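The post doesn't say how the affinity was set. As a hypothetical scripted equivalent, the sketch below restricts an already running process (identified by its PID) to a single core. On Linux it uses the standard library; elsewhere it assumes the third-party psutil package, whose `cpu_affinity` works on Windows but not on macOS.

```python
import os


def pin_to_single_core(pid: int, core: int = 0) -> None:
    """Restrict the process `pid` to run on one CPU core only."""
    if hasattr(os, "sched_setaffinity"):
        # Linux: standard-library affinity call
        os.sched_setaffinity(pid, {core})
    else:
        # Windows: assumes psutil is installed (not supported on macOS)
        import psutil
        psutil.Process(pid).cpu_affinity([core])
```

Calling it right after the player starts, but before a file is opened, mirrors the procedure described above.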

I used four different codecs for testing: FFmpeg via ffdshow r2583 (built by clsid on 2009-01-05), DivX 7.0 (Build 08_01_00_00094), CoreAVC 1.8.5.0 and (new!) Elecard's AVC Decoder (v.2.3.81219, 2008-12-19). I'd have liked to include the CyberLink, InterVideo, Nero and some other decoders, too, but no free trial versions are available. One of the test machines happened to have CyberLink's H.264 decoder installed; quick tests showed its performance to be somewhere between FFmpeg and CoreAVC.

The results

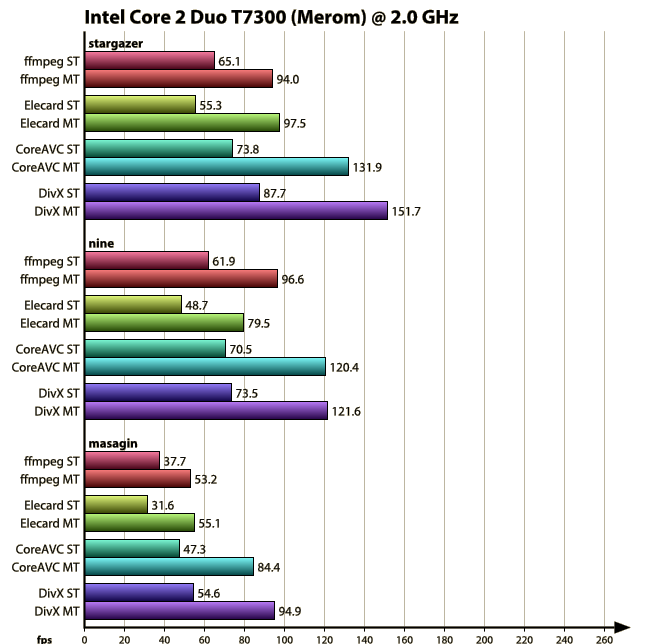

First, I reran all the tests on the Core 2 Duo I used for the first benchmark.

The results are consistently a few fps better than last time, which is due to the better benchmark method. Other than that, there are no differences at all: CoreAVC 1.8.5 brought no measurable improvements compared to 1.6.0.

The Elecard decoder is very slow, even slower than FFmpeg, especially on CAVLC-coded sequences. But it scales better: its multi-threaded results for the CABAC streams are at least slightly better than FFmpeg's. Still, it doesn't stand the slightest chance against CoreAVC and DivX.

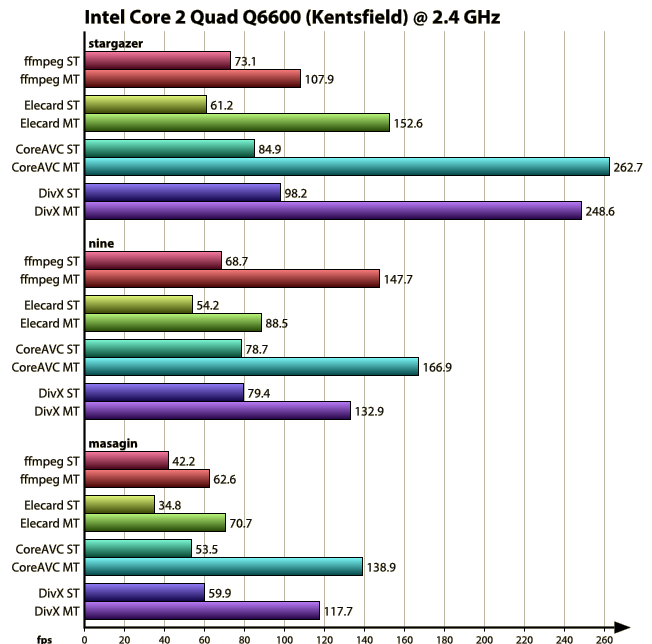

The next machine I tested the codecs on was a Core 2 Quad: almost the same core, but now there are four of them instead of two. The clock speed is also a bit higher, so we get a consistent 10% improvement in the single-threaded speeds. The multi-threaded values are much more interesting, though :)

On two of the three sequences, most codecs scale only with the clock speed and make no use of the two extra cores; on the third, they scale just a bit better. For most codecs, this »good« sequence is stargazer, but surprisingly, FFmpeg seems to like nine much better, even surpassing DivX's performance there.

The only codec that consistently gains more than just a 10-20% clock speed boost and thus really makes use of the four cores is CoreAVC, which outperformed all other codecs on all sequences here. It isn’t four times the single-threaded speed, though, but with 2.5-3x, I think it’s as good as it gets.
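The 2.5-3x figure can be put in perspective with a little arithmetic. The sketch below computes the speedup, the parallel efficiency (speedup divided by core count) and, as my own addition rather than part of the original analysis, the parallel fraction p implied by Amdahl's law, S = 1 / ((1 - p) + p/N), solved for p. Amdahl's law assumes a fixed workload and ignores memory-bandwidth and clock effects, so treat p as a rough indicator only.

```python
def scaling(single_fps: float, multi_fps: float, cores: int) -> tuple:
    """Return (speedup, parallel efficiency, Amdahl parallel fraction)."""
    if cores < 2:
        raise ValueError("need at least two cores to measure scaling")
    speedup = multi_fps / single_fps
    efficiency = speedup / cores
    # Amdahl: 1/S = (1 - p) + p/N  =>  p = (1 - 1/S) / (1 - 1/N)
    parallel_fraction = (1 - 1 / speedup) / (1 - 1 / cores)
    return speedup, efficiency, parallel_fraction
```

With the single-threaded speed normalized to 1.0, a 2.5x speedup on four cores gives 62.5% efficiency and p = 0.8; a 3x speedup gives 75% efficiency and p ≈ 0.89.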

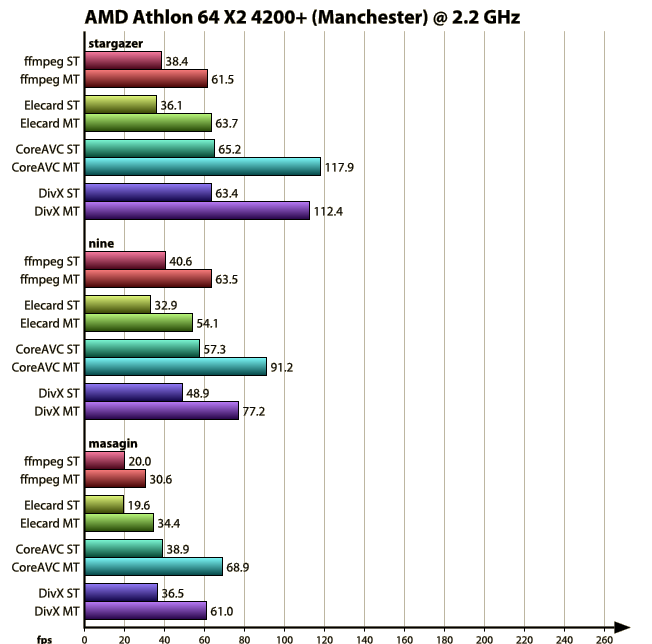

Finally, I included an AMD dual-core machine in the benchmark. It's a bit older than the other two boxes, so the overall speeds are much lower, but that's not the point. While FFmpeg and Elecard perform just as they did on the other machines, CoreAVC and DivX practically swapped roles: DivX was faster in single-core and dual-core mode on the Intel boxes, but CoreAVC outperforms it on the AMD chip.

Conclusions

It's true that I didn't do CoreAVC justice in my first benchmark. However, that's not because I used an outdated version. It just seems that DivX is extremely well optimized for dual-core CPUs with the Intel Core microarchitecture. Give CoreAVC more cores or a different architecture like AMD's, and it shows its muscles and beats DivX easily. On a Phenom X4, CoreAVC should be extremely fast.

So DivX might not be the fastest decoder on earth, but it's surely the fastest free one. FFmpeg doesn't even come close. The Elecard decoder is a mystery to me – for a whopping $40, it performs as badly as FFmpeg. Who is supposed to buy it, considering that for just $15 you get CoreAVC, which can be faster on one core than Elecard is on two?

Comments

What about image quality? I've seen some improvement in CoreAVC compared to FFmpeg, but it would be good if you could compare them.

jet.foxx: Assessing image quality is hard. There are no objective methods to measure the quality of images, let alone video sequences. Also, I didn't test different video filters, but different H.264 decoders. Unlike MPEG-2 and other earlier video standards, H.264 requires all correct decoders to produce exactly the same images, bit for bit. Anything else would be a bug. Of course there are bugs, but I simply assumed that all of the decoders I tested work "correctly enough" to produce images free of decoder error artifacts. Skimming over the decoded images, I also saw no obvious errors.

So, if decoder A produces different results from decoder B, this means that at least one of them is buggy or does some post-filtering it isn’t supposed to do. If you know of any real-world sequences where visible differences occur, I’d be very interested to have a closer look at them.
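Since conforming H.264 decoders must agree bit for bit, checking two decoders against each other comes down to a byte comparison of their output. A minimal Python sketch, assuming each decoder's output has already been dumped to a raw 8-bit planar YUV 4:2:0 file (producing those dumps isn't covered here, and the file names in the usage line are hypothetical):

```python
from typing import Optional


def first_differing_frame(path_a: str, path_b: str, width: int, height: int) -> Optional[int]:
    """Return the index of the first frame that differs, or None if identical.

    Assumes both files hold raw 8-bit YUV 4:2:0 frames of the given size.
    If one file is shorter, the first missing frame counts as different.
    """
    frame_size = width * height * 3 // 2  # Y plane plus two quarter-size chroma planes
    with open(path_a, "rb") as fa, open(path_b, "rb") as fb:
        index = 0
        while True:
            frame_a = fa.read(frame_size)
            frame_b = fb.read(frame_size)
            if not frame_a and not frame_b:
                return None  # both files ended together: bit-identical
            if frame_a != frame_b:
                return index
            index += 1
```

For a 720p sequence, a call might look like `first_differing_frame("decoder_a.yuv", "decoder_b.yuv", 1280, 720)`. A decoder that applies post-filtering would typically show up as a difference from the very first frame.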

Is it correct that none of those decoders use the hardware acceleration available on ATI and nVidia video cards?

Someone said that the built-in decoder of Media Player Classic Home Cinema (MPC-HC) uses hardware acceleration. Does that mean MPC-HC's libavcodec-based decoder uses hardware acceleration while ffdshow doesn't? Wouldn't the results be different then?

Depending on the decoder (Nero, MPC-HC, and others?) and, obviously, on the video card itself, where would those hardware-accelerated decoders land in this benchmark?

That's right, all of these codecs are pure software codecs. As far as I know, hardware acceleration requires support from the player application; a DirectShow codec alone can't implement it.

MPC-HC has built-in support for DXVA hardware acceleration. If DXVA isn't available, it falls back to a libavcodec-based software decoder.

I got similar benchmark results on a Core 2 Duo E8400 (3.0 GHz, overclocked to 4.2 GHz) with 720p movie samples: DivX 7 (Build 08_01_00_00094) was 19% faster than CoreAVC 1.9.5. Here are my results.

coreavc

User: 3s, kernel: 0s, total: 4s, real: 37s, fps: 2572.4, dfps: 278.8

User: 3s, kernel: 0s, total: 3s, real: 36s, fps: 2730.5, dfps: 285.9

divx7

User: 5s, kernel: 0s, total: 5s, real: 30s, fps: 1887.4, dfps: 336.8

User: 4s, kernel: 0s, total: 4s, real: 30s, fps: 2413.9, dfps: 336.0

I think DivX 7 uses the resources of dual-core Intel CPUs more efficiently.
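As a quick check of the 19% figure, the dfps values above can be averaged per decoder and compared. This assumes dfps is the decoded-frame rate reported by the commenter's benchmark tool, which the comment doesn't name:

```python
from statistics import mean

# dfps values from the two runs per decoder quoted above
coreavc_dfps = [278.8, 285.9]
divx7_dfps = [336.8, 336.0]

speedup = mean(divx7_dfps) / mean(coreavc_dfps)
print(f"DivX 7 vs CoreAVC: {speedup - 1:.0%} faster")  # prints "DivX 7 vs CoreAVC: 19% faster"
```

The wall-clock "real" times (36-37 s versus 30 s) point the same way.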